Imagine nothing. Got it? Now, imagine that not even nothing exists. For after all, nothing is something. At the very least “nothing” implies its opposite, and I’m asking you to imagine a time before opposites are even possible, before time is possible.

Then, imagine a point, the way geometry defines a point, with no dimensions. This point is something. But it can exist for only a billion-trillionth of a second, although a second is something that doesn't really exist yet. The word "yet" implies that a future does exist, however, and in that infinitesimal fraction of eternity, physicists tell us, the point (which is everything that exists or ever will exist) "expanded," although that word cannot adequately express the explosion. In fact, the universe ejaculated into both something and nothing. It gave rise to particles and antiparticles, and we were off to the races.

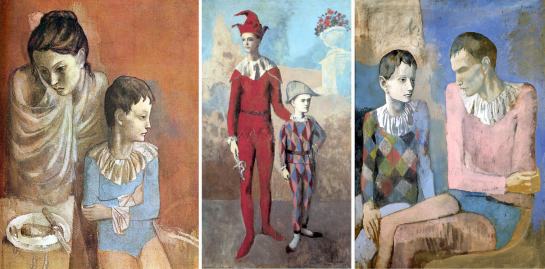

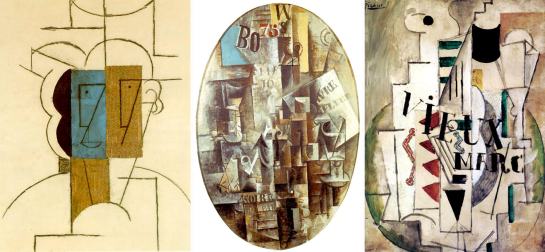

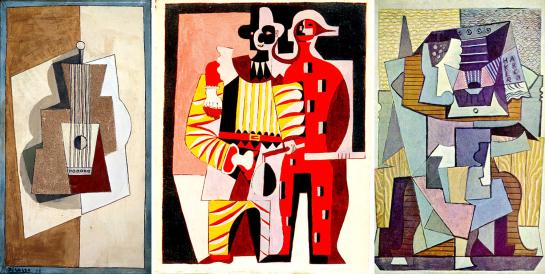

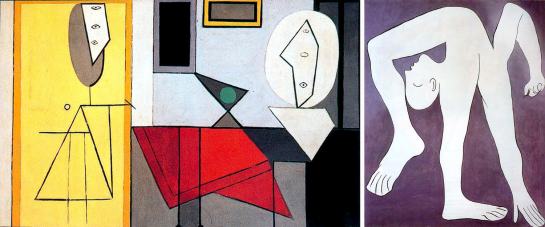

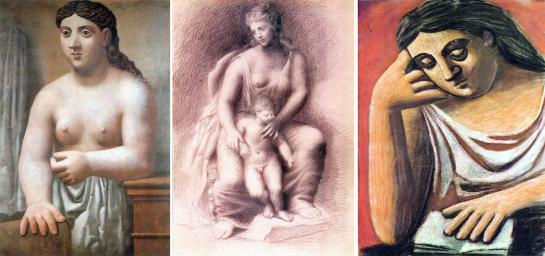

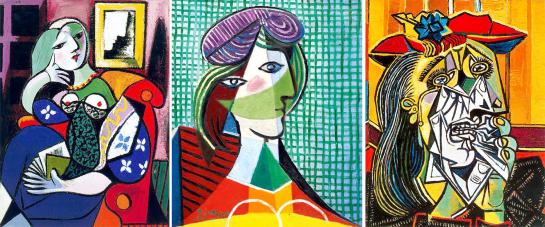

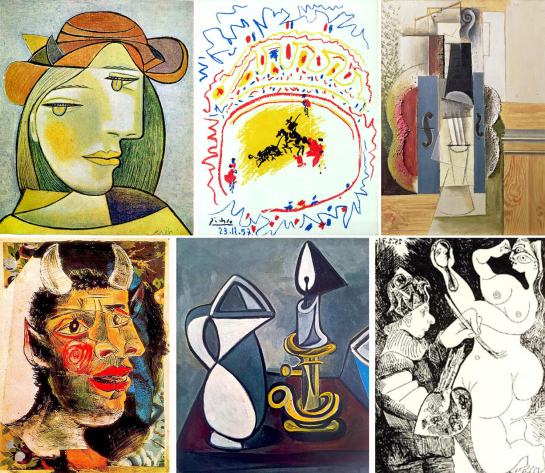

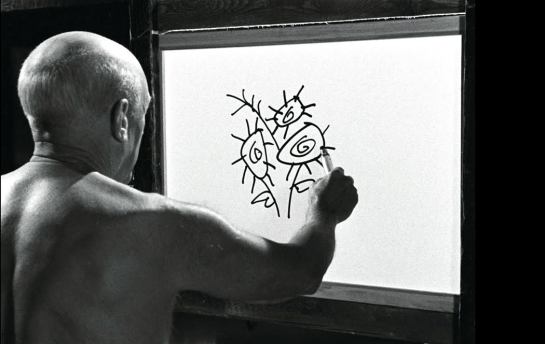

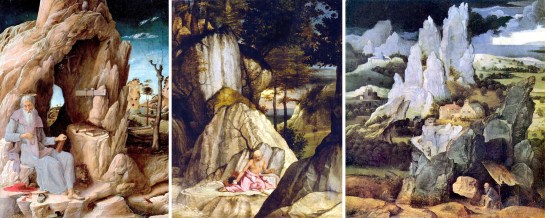

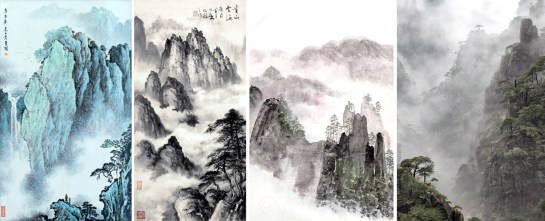

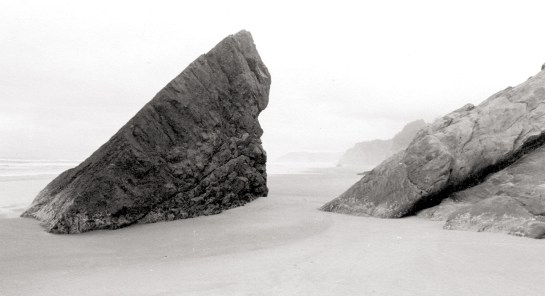

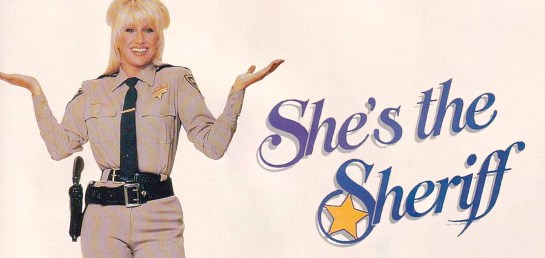

Chaos 1

It is important to note that the "point" did not expand into a great big empty nothingness; rather, something and nothing expanded together, and they keep expanding, even as we sit sipping our tea and watching The Big Bang Theory in endless reruns on TV. There is math to show this, but you wouldn't understand it. I certainly don't. It's complicated.

“Alice laughed. ‘There’s no use trying,’ she said. ‘One can’t believe impossible things.’

“‘I daresay you haven’t had much practice,’ said the Queen. ‘When I was your age, I always did it for half-an-hour a day. Why, sometimes I’ve believed as many as six impossible things before breakfast.’”

As it says in the Tao Te Ching, “Thus something and nothing produce each other.”

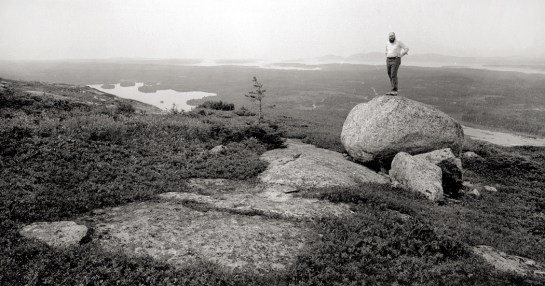

Now it is 13.799 billion years later, and the universe is still expanding, ever faster. And we are riding on one meager little mote in that great soup, called the planet Earth. It is something. Now, "nothing" is what exists between the bits of "something."

That is our Creation Myth.

By calling it a myth, I am not implying it is not true, or not factual. Myth does not mean something is untrue; it is our way of comprehending what is beyond our actual understanding.

Myth is our explanation to ourselves of something. It may be factual, it may be fantastical. It may be taken literally or it may be understood as metaphor. Either way, it is an approach to the comprehension of something too complex to be held in the mind any other way.

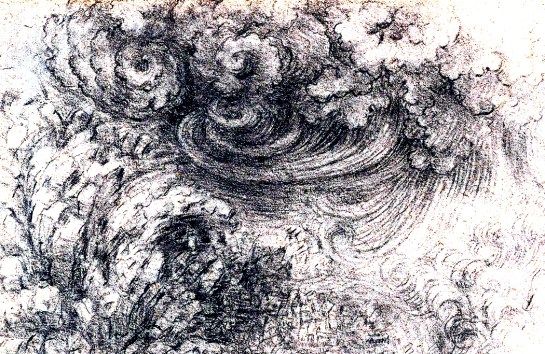

Chaos 2

A physicist may be able to put the math together and parse out the myth in non-mythic terms (I use the word "may" advisedly), but the rest of us take it on faith that our creation myth is scientifically verifiable and therefore factual. It is the myth we believe in, i.e., the story we take as true. (That it is true is irrelevant to its function as myth.)

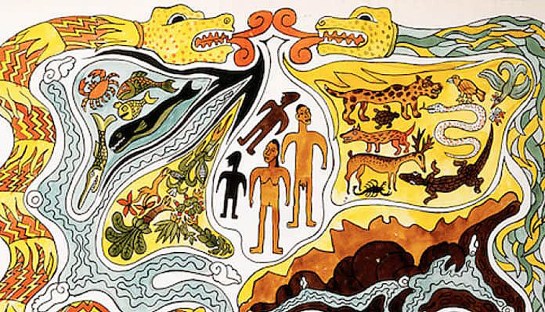

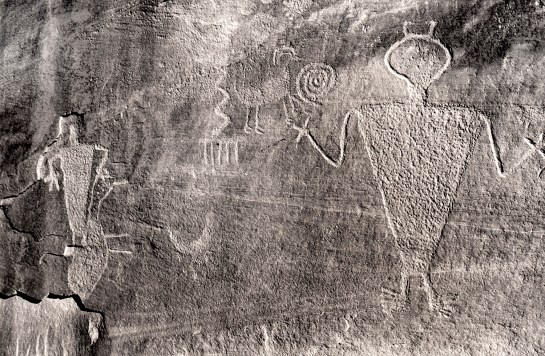

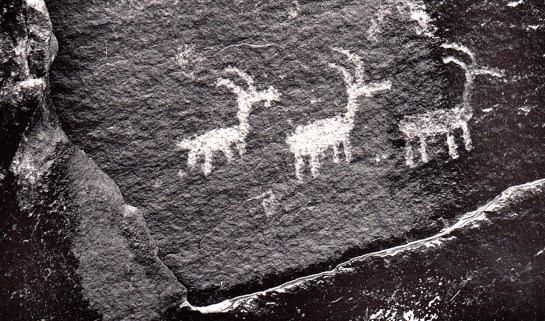

We mistakenly tend to look on myth as something from the past: Zeus or Achilles, or Odin, or Indra fighting Vritra, or Quetzalcoatl, or the Chinese dragon. It is something we condescend to, having learned better. We know that thunder isn’t clouds crashing together. But such an attitude misunderstands myth and its function. We all live by myth, even now.

Chaos 3

There are things we do not or cannot understand, either too complicated to grasp or just plain unknowable. We need a metaphor to help us come to grips with such things. Language cannot describe them with the precision of a dictionary; instead it has to fall back not on "what it is," but on "what it is like." We tell a story.

The Big Bang is our story. When we assume our superiority, we fail to understand that most of us are relying on the argument from authority no less than people in the Middle Ages did. We must trust that the physicist knows what we merely accept. (I am assuming that a physicist has a more complete understanding than even an educated layperson.)

And since we cannot know every corner of relativity or quantum mechanics, we simplify it all into a comprehensible story. The Big Bang.

Chaos 4

I am not claiming what science has parsed out is false, but that our understanding as non-scientists is a mythological understanding, not a literal one. And for that matter, I doubt any scientist is equally conversant in all aspects of the field — relativity, quantum theory, the math and the particle physics. Perhaps he or she has a good grasp on black holes, but how much has he or she published on quarks with spin? Specialization is necessary for modern science, and even a scientist has to rely on and trust the work of others.

And it is important to remember that not all scientists agree. The popular version of the Big Bang is certainly wrong, or at the very least wildly simplified. New theories are always coming forth. No version is entirely consistent and coherent, not even the Bible’s.

Chaos 5

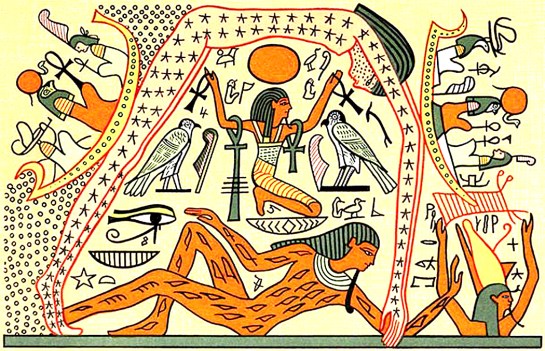

All of which takes me off point: Creation myth. There are so many of them, from the Chinese cosmic egg to the Mesopotamian butchery of the sea goddess Tiamat. The one we in the West are most familiar with is that of Genesis.

"In the beginning God created the heaven and the earth. And the earth was without form, and void; and darkness was upon the face of the deep. And the Spirit of God moved upon the face of the waters. And God said, Let there be light."

We are so used to the organ tones of the King James translation that sometimes putting it into modern English takes away some of the majesty.

“When God began creating the sky and earth, the earth was formless and empty.”

Chaos 6

There are many believers who take this story literally, just as most of us take the Big Bang literally. For most of us, though, the Bible story is a story. So, if we had to stake our lives on it, we would more likely defend physics, even if we were Christian believers, and accept that ancient Middle Eastern poetry is just that. The King James Genesis is transcendent poetry. But so is our story of the Big Bang.

“Mythology opens the world so that it becomes transparent to something that is beyond speech, beyond words, in short, to what we call transcendence,” said scholar Joseph Campbell.

“The energies of the universe, the energies of life, that come up in the sub-atomic particle displays that science shows us, are operative. They come and go. Where do they come from? Where do they go? Is there a where?”

Which returns us to the Big Bang.

Chaos 7

Physicist Paul Dirac in 1930 imagined a where. Now called the "Dirac Sea," it is an infinite or unnumbered source of subatomic particles that exist "beneath" our visible world. Electrons may pop up anywhere, as quantum physics has shown, and may also disappear. Where they come from and where they go is the Dirac Sea. Using the nautical term is another case of mythology making familiar what cannot be grasped otherwise.

Imagine a billiard table, he said, completely covered in balls, leaving no room. Place another ball on top and it sits there, on top of the rest. But push it down and that forces another ball to pop up elsewhere. We cannot predict where. The one above the rest is the one we see and measure; the rest, below, are the "Dirac Sea," unavailable for study in the visible universe.

Yes, it’s a story. Most of us would run screaming from the math involved in a more proper explanation.

Chaos 8

"The ultimate ground of being transcends definition, transcends our knowledge," said Campbell. "When you begin to ask about ultimates, you are asking about something that transcends all the categories of thought, the categories of being and non-being. True, false; these are, as Kant points out in The Critique of Pure Reason, functions of our mode of experience. And all life has to come to us through the esthetic forms of time and space, and the logical ones of the categories of logic, so we think within that frame."

“But what is beyond? Even the word beyond suggests a category of thought. So transcendence is literally transcendent.”

Chaos 9

Vedic mythology has many creation stories, but in the most widely known, Brahman, the ultimate ground of reality, is the source of all. However, as the Upanishads say, "Brahman" is just a word, and already a distortion of the ultimate, which is beyond words, beyond category, beyond comprehension.

This holds true "of all knowledge" that is beyond comprehension, Campbell said. "In the Kena Upanishad, written back in the seventh century BC, it says very clearly, 'That to which words and thoughts do not reach.' The tongue has never soiled it with a name. That's what transcendent means. And the mythological image is always pointing toward transcendence and giving you the sense of riding on this mystery."

Pillars of Creation

So we look at the Hubble image of a portion of the Eagle Nebula, which we have named "The Pillars of Creation." It is a transcendent image, and it fills most of us with genuine awe. But of course, it is a photograph in false color: It would not look that way if seen by a human eye through a telescope. It is a myth. Again, I am not saying it is not true. Even the false color is true in its way: it provides a way to see wavelengths that cannot register in a human eye but are there nonetheless.

But let us go back again to that bit before "something" and before "nothing," that pair of opposites.

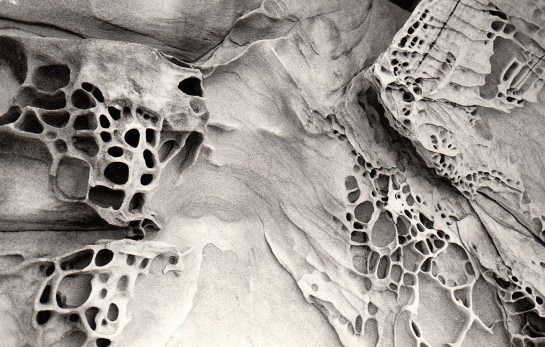

In many recorded myths, before anything, there was Chaos. We should not be fooled by modern science's chaos theory. There, chaos is just something so complex, so sensitive to its starting conditions, that it cannot be predicted in practice, even though it obeys mathematical rules. But mythological Chaos is something else again: It is to order what eternity is to time. Eternity is not simply forever, but rather outside of time altogether.

Likewise, Chaos in myth is not a lack of order, but something outside the very idea of order. It comes before the organization of "categories of thought," and cannot be described in either words or algebra.

Chaos 10

The word comes from the Greek χάος, meaning "emptiness, vast void, chasm, abyss," related to the verbs χάσκω and χαίνω, "gape, be wide open," from a Proto-Indo-European root that, millennia later, also gave rise to the English "yawn."

In Shelley's Prometheus Unbound, the Spirit of the Hour talks about "The loftiest star of unascended heaven, / Pinnacled dim in the intense inane." Here the poet's unfortunate-sounding word doesn't mean insipidity; it comes from the Latin inanis, which meant "empty" or "void." And so I like to think of chaos as an intense emptiness.

Chaos 11

My favorite Creation myth is found in the opening of Ovid’s Metamorphoses: “Before the sea was, and the lands, and the sky that hangs over all, the face of Nature showed alike in her whole round, which state have men called chaos: a rough unordered mass of things, nothing at all save lifeless bulk and warring seeds of ill-matched elements heaped in one.”

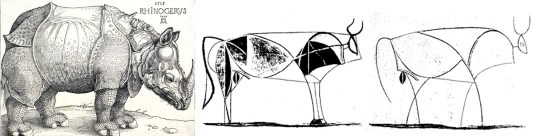

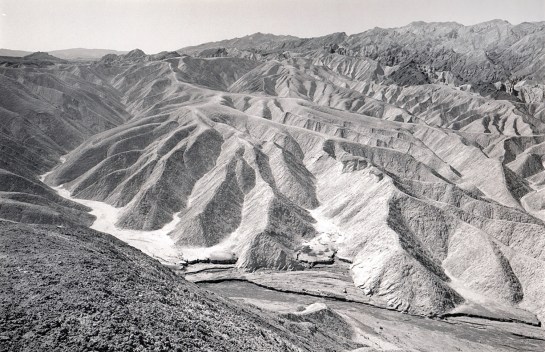

In his De Rerum Natura ("On the Nature of Things"), the Roman writer Lucretius (ca. 99–55 BC) comes very close to both modern astrophysics and quantum mechanics, although he tells it in mythic terms rather than mathematical formulas.

Chaos 12

For Lucretius, the universe has always existed. Nothing can be created from nothing, he wrote, nor can anything be destroyed into nothing, which anticipates the conservation of matter and energy. Originally, he wrote, the universe was an undifferentiated mass of atoms, all traveling in straight lines (anticipating Newton's First Law of Motion), but the atoms had an odd, irrational tendency to "swerve." This unaccounted-for divergence in the atoms' direction led them to bump into each other, making concentrations of matter in some places and voids in others. That is very like the astrophysicists' explanation of how, as the early universe cooled after the Big Bang, gravity and density fluctuations led to an unequal distribution of matter.

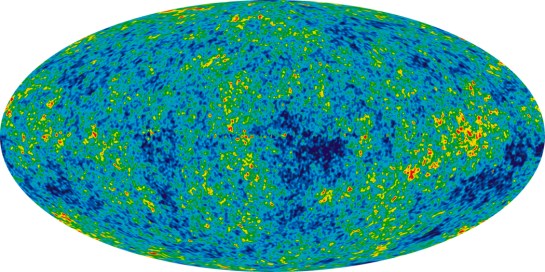

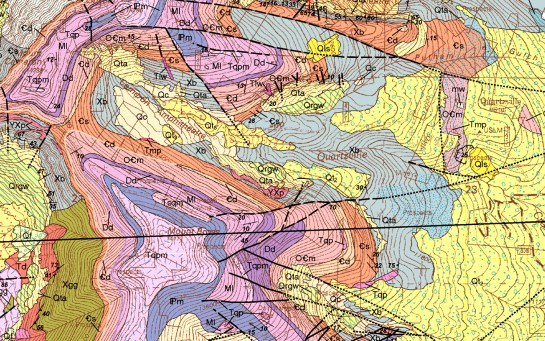

Map of the cosmic microwave background radiation

We have an image of this in the map of the cosmic microwave background radiation. Its accidental discovery in 1965 supported the Big Bang theory (and Lucretius) with actual data. The two scientists who found it, Arno Penzias and Robert Wilson, at first thought the signal was "noise" caused by pigeons nesting in the Holmdel Horn Antenna in New Jersey, where they did their work. It turned out, no, it was evidence of the early universe: light released when the cooling cosmos first let protons and electrons combine into atoms.

And so, the Big Bang is now part of the wider public's sense of where the universe came from. Physicists and cosmologists worry over the many corners that don't neatly fit, and reams of arcane mathematical formulae are published to support one idea or another. General relativity doesn't fit, tab-into-slot, with quantum mechanics, and scientists scratch their heads and try new ideas. And they sometimes torture the English language with things like "temperature inhomogeneities," and torture math with paragraph-long formulas with no actual numbers in them. Looks good on a whiteboard, but it's Greek to me.

Someday a newer common myth will be created to refine or supplant the version of the Big Bang that educated laypeople currently accept. Again, that doesn't mean the myth isn't true, but that it is a story that attempts to make unexplainable facts comprehensible to the puny human mind, which evolved, after all, when we were still banging rocks together. Sometimes it's like watching a monkey trying to open a jar of pickles.