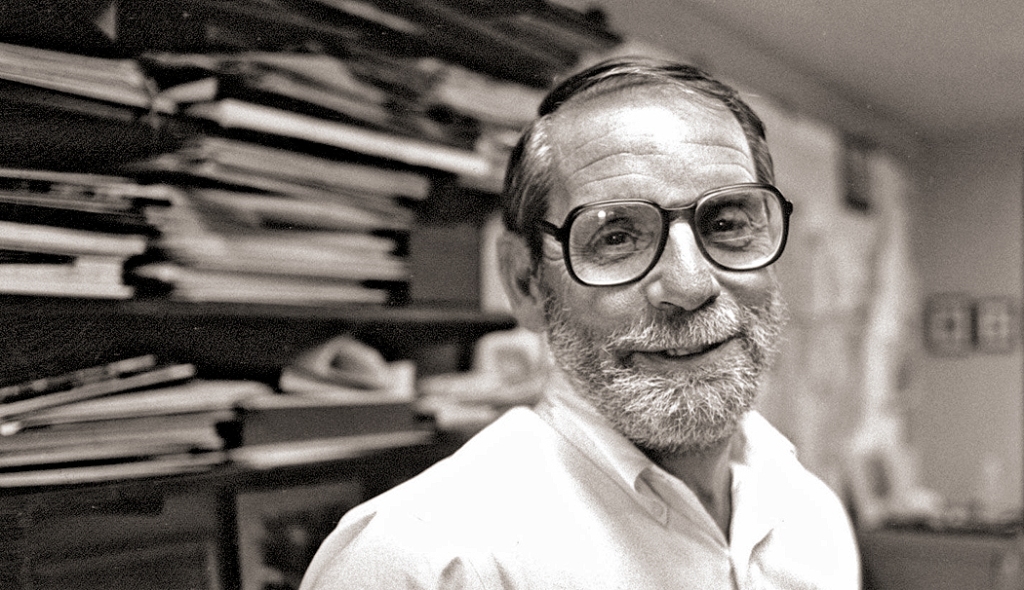

The venerable writer John McPhee wrote a short, episodic memoir for the May 20, 2024 edition of The New Yorker, and in it he discussed proofreading. The piece hit home with me.

I began my career at The Arizona Republic as a copy editor, which is not exactly the same thing as a proofreader, but many of the duties overlap, and many of the headaches are the same.

A proofreader, by and large, works for a book publisher and will double-check the galley proofs of a work for typos and grammatical errors. The work has already been typeset and a version has been printed, which is what the proofreader goes over.

A copy editor works for a magazine or newspaper and is usually one of a team of such editors, who read stories before they are typeset and check not only spelling and grammar, but factual material and legal issues, to say nothing of that great bugbear, the math. English majors are not generally the greatest when dealing with statistics, percentages, fractions — or for that matter, addition or subtraction.

Arizona Republic staff, ca. 1990

In a newspaper the size of The Republic, a reporter turns in a story (usually assigned by the section editor) and that editor then reads it through to make sure all the necessary parts are included, and that the presentation flows in a sensible manner. Section editors are very busy people, dealing with personnel issues (reporters can be quite prissy); planning issues (what will we write about on July 4 this year); remembering what has been covered in the past, so we don’t duplicate what has been done; dealing with upper management, most of whom have never actually worked as reporters and who have “ideas” that are often goofy and unworkable; and, god help them, they attend meetings. They cannot waste their time over tiny details. Bigger fish to fry.

Once the section editor has OKed a piece it goes on to the copy editors, those troglodyte minions hunched over their desks, who then nitpick the story, not only for spelling and style — the Associated Press stylebook can be quite idiosyncratic and counterintuitive — but also for missing bits or mis-used vocabulary, and double-checking names and addresses. A copy editor is a rare beast, expected to know not only how to spell “accommodate,” but also who succeeded Charles V in the Holy Roman Empire (Ferdinand I, by the way).

The copy editor then hands the story over to the Slot. (I love the more arcane features of any specialized vocation.) The Slot is the boss of the copy desk. In the old days, before computers, copy editors traditionally sat around the edge of a circular or oblong desk with a “slot” in the center where the head copy editor sat, collecting the stories from the ring of hobbits surrounding him. He gave the stories a final read-through, catching anything the previous readers may have missed. Later the story would be given a headline by a copy editor and that headline given a final OK by the Slot. Only then would the story be typeset.

That means a typical newspaper story is read at least four times before it is printed. Nevertheless, there will always be mistakes. Consider The New York Times. Every typo that gets through generates angry letters-to-the-editor demanding “Don’t you people have proof-readers?” Well, we have copy editors. And why don’t you try to publish a newspaper every day with more words in it than the Bible and see how “perfect” you are? Typos happen. “The best laid schemes o’ Mice an’ Men Gang aft agley.”

When I was first hired as a troglodyte minion, I had no experience in journalism (or very little, having spent time in the trenches of a weekly Black newspaper in Greensboro, N.C., which was a very different experience from a big-city daily) and didn’t fully understand what my job entailed. I thought I was supposed to make a reporter’s writing better, and so I habitually re-wrote stories, often shifting paragraphs around wholesale, altering words and word order, and cutting superfluous verbiage. That I wasn’t caught earlier and corrected tells me I must have been making the stories better.

There was one particular movie critic who had some serious difficulty with her mother tongue and wrote long, run-on sentences, some of which may have been missing verbs, or full of unsupported claims easily debunked. (I hear an echo of her style in the speeches of Donald Trump.) I regularly rewrote her movie reviews from top to bottom, attempting to make English out of them.

One day, I was a bit fed up, and e-messaged the section editor that the critic’s review was gibberish and I included the phrase, “typewriters of the gods.” Unfortunately the reviewer was standing over the desk of the section editor and saw my sarcastic description and became outraged. I had to apologize to the movie critic and stop rewriting her work.

Lucky for me, the fact that I could make stories better brought me to the attention of the section chiefs and I was promoted off the copy desk and into a position as a writer — specifically, I became the art critic (and travel writer, and dance critic and architecture critic, and classical music critic, and anything else I thought of). I’m sure the other copy editors and the Slot were delighted to see the back of me.

That is, until they had to tackle copy editing my stories. I had a few idiosyncrasies of my own.

Here I must make a distinction between a reporter and a writer. I was never a reporter, and was never very good at that part of the job. Reporters are interested primarily in collecting information and fact. Some of them can write a coherent sentence, but that is definitely subordinate to their ability to ferret out essential facts and relate them to other facts. A reporter who is also a good writer is a wonder to behold. (In the famous team of Carl Bernstein and Bob Woodward, the latter was a great reporter and mediocre wordsmith — as his later books demonstrate. Bernstein was a stylish writer. Together they functioned as a whole).

I was, however, a writer, which meant that my primary talent and purpose was to put words into an order that was pleasant to read. I love words. From the second grade on, I collected a vocabulary at least twice as large as the average literate reader, and what is more, I loved to employ that vocabulary. Words, words, words.

And so, when my stories passed through the section editor and got to the copy desk, the minions were oft perplexed by what I had written. Not that their vocabularies were any smaller than mine, but that such words were hardly ever printed in a newspaper. I once used “paradiddle” in a review and the signal went up from the copy desk to the section editor, who came to me. We hashed it out. I proved to her that the word was, indeed, in the dictionary, and the word descended back down the food chain to the copy desk and the word was let alone.

But this led to a bit of a prank on my part. For a period of about six months (I don’t remember too clearly exactly, back in the Pleistocene when this occurred) I included at least one made-up word in every story I wrote. It was a little game we played. These words were always understandable in context, and were often something onomatopoetic and meant to be mildly comic (“He went kerflurfing around,” or “She tried to swallow what he said, but ended up gaggifying on the obvious lies”). For those six months, a compliant copy desk let me get away with every one of them. Every. Single. One. Copy editors, despite their terrifying reputation, can be flexible. Or at least they threw up their hands and got on to more important matters.

I will be forever grateful to my editors, who basically let me get away with murder, and the copy desk at The Arizona Republic, for allowing me to write the way I wanted (and pretty much the only way I knew how). Editors, of both stripes, will always be my heroes.

John McPhee

Back to John McPhee. He describes the difficulty of spotting typos. Of course most are easily caught. But often the eye scans over familiar phrases so quickly that mistakes become invisible. In a recent blog, I wrote about Salman Rushdie’s newest book, Knife, and I had its subtitle as “Meditations After and Attempted Murder.” I reread my blog entries at least three times before posting them, in order to catch those little buggers that attempt to sneak through. But I missed the “and” apparently because, as part of a phrase that we use many times a day, the eye reads the shape of the phrase rather than the individual words and letters.

There is a common saying amongst writers: “Everyone needs a copy editor,” and when I retired from The Republic, I lost the use, aid, and salvation of a copy desk. I had to rely on myself and my re- and re-reading my copy. But typos still get through. And on the day after I post something new, I will sometimes get an e-mail from my sister-in-law pointing out a goof. She let me know about my Rushdie “and,” and I went back into the text and corrected it (something not possible after a newspaper is printed and delivered). She has saved me from my mistakes many, many times, and has become my de facto copy editor.

But my training as both writer and copy editor has stood me well. Unlike so many other blog posters, I double-check all name spellings and addresses, my math and my facts. I am quite punctilious about grammar and usage. And even though it is no longer required, I am so used to having AP style drilled into me, I tend to fall in line like an obedient recruit.

In his story, McPhee details trouble he has had with book covers that sometimes misrepresented his content. And that hit me right in the whammy. One of the worst experiences I ever had with the management class came when I went to South Africa in the 1980s. Apartheid there was beginning to falter, but was still the law. I noticed that racial laws were taken very seriously in the Afrikaner portions of the country, but quite relaxed in the English-speaking sections.

And I wrote a long cover piece for the Sunday editorial section of the paper about the character of the Afrikaner and the racial tensions I found in Pretoria, Johannesburg and Cape Town. The Afrikaner tended to be bull-headed, bigoted and unreflective. And I wrote my piece about that (and the fascist uniformed storm troopers that I witnessed threaten the customers at a bar in Nelspruit). The northern and eastern half of South Africa and its southern and western half were like two different countries.

As I was leaving the office on Friday evening, I saw the layout for the section cover and my story, and the editor had found the perfect illustration for my story — a large belly-proud Afrikaner farmer standing behind his plow, wiping the sweat from his brow and looking self-satisfied and as unmovable as a Green Bay defensive tackle. No one was going to tell him what to do. “Great,” I thought. “Perfect image.”

But when I got my paper on Sunday, the photo wasn’t there, being replaced by a large Black woman waiting dejectedly at a bus station, with her baggage and duffle. My heart sank.

When I got back to the office on Monday, I asked. “Howard,” was the reply. Earlier in this blog, I mentioned management, with which the writer class is in never-ending enmity. Howard Finberg had been brought to the paper to oversee a redesign of The Republic’s look — its typeface choices, its column width, its use of photos and its logos — and somehow managed to weasel his way permanently into the power structure. He was one of those alpha-males who will throw his weight around even when he doesn’t know or understand diddly. I will never forgive him.

He had seen the layout of my story and decided that the big Afrikaner, as white as any redneck, simply “didn’t say Africa.” And so he found the old Black woman that he thought would convey the sense of the continent. Never mind that my story was particularly about white South Africa. Never mind that he hadn’t taken the time to actually read the story. That kind of superficial marketing mentality always drives me nuts, and this time it ruined a perfectly good page for me. Did I say, I will never forgive him?

It reminds me of one more thing about management. In the early 2000s, when The Republic had been taken over by the Gannett newspaper chain, management posted all over our office, on all floors, a “mission statement.” It was written in pure management-ese (which I call “manglish”) and was so diffuse and meaningless, full of “synergies” and “goals” and “leverage,” that I said, “If I wrote like that, I’d be out of a job.”

How can those in charge of journalism be so out of touch with the language that is a newspaper’s bread and butter?

These people live in a very different world — a different planet — from you and me. I imagine them, sent by Douglas Adams, on the space ship, packed off with the phone sanitizers, management consultants, and marketing executives, sent to a tiny forgotten corner of the universe where they can do less harm.

One final indignity they have perpetrated: They have eliminated copy editors as an unnecessary cost. When I retired from the newspaper, reporters were asked to show their work to another writer and have them check the work. A profession is dying and the lights are winking out all over Journalandia.
