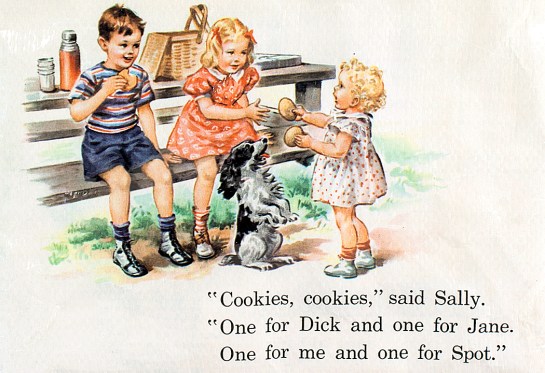

I have been a reader for about three quarters of a century. It began so long ago, I don’t remember exactly when I started — probably in kindergarten when I discovered words. But once I began, I couldn’t get enough.

I read anything I could get my mitts on, from the backs of cereal boxes to the funny pages in the newspaper to road signs when the family was out driving. I’m sure I drove my parents nuts by pointing out stop signs and mileage markers.

This is not to claim I was any sort of prodigy. I believe most kids latch on to text as soon as they learn to decipher it.

But lately, I’ve been thinking about how my reading habits have changed over the years, and how those changes parallel life experience, and the needs I sought to satisfy as I grew up. Shakespeare wrote about the seven ages of life, and I think about the stages of reading.

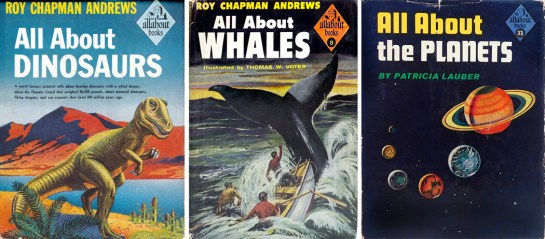

My early reading is chronicled by years. In third grade, I was fascinated with dinosaurs and read all the books I could find in my elementary school library (which was also the town library). There was Roy Chapman Andrews and his All About Dinosaurs, part of a series of “All About” books written for kids.

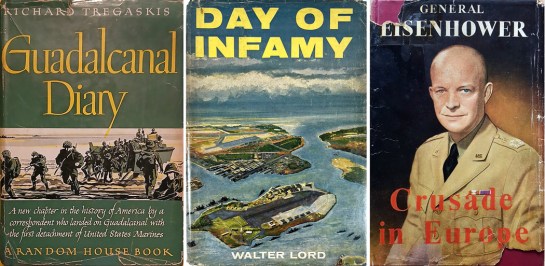

By the fourth grade, I had moved on to whales, and spent the school year absorbing everything I could about cetaceans. And so it progressed through astronomy and airplanes, and by the seventh grade, I was into World War II. By then I had left behind the books written for young readers and taken on the history books and memoirs. Guadalcanal Diary by Richard Tregaskis; Day of Infamy by Walter Lord; Crusade in Europe by Dwight Eisenhower.

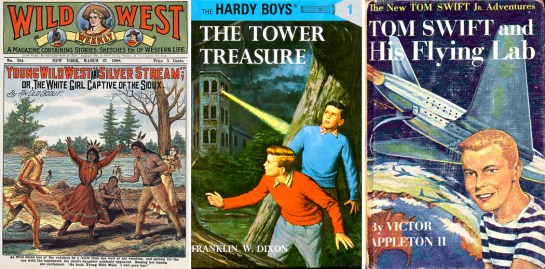

Other boys my age were reading Hardy Boys books and maybe Sherlock Holmes, but I was single-minded in reading non-fiction. I wanted to learn everything. I was a compendious fact basket. Dump it all in.

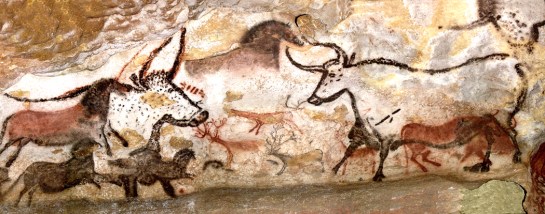

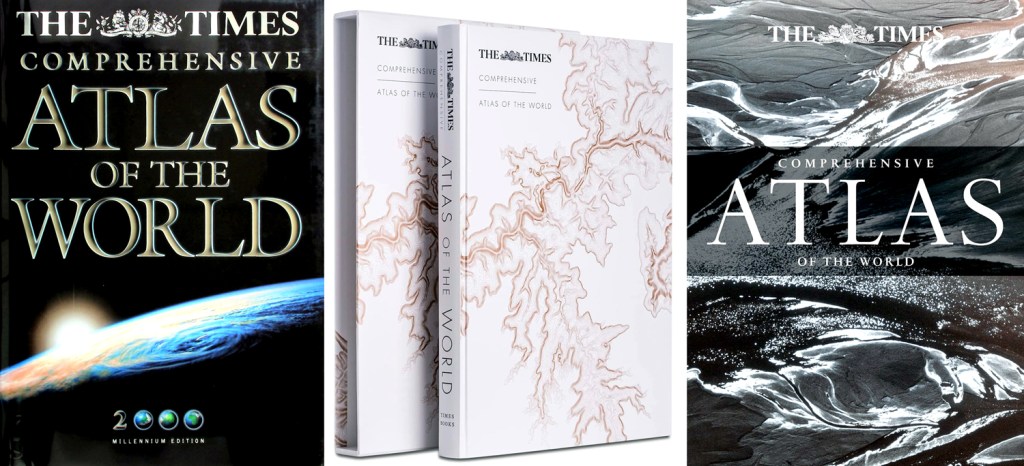

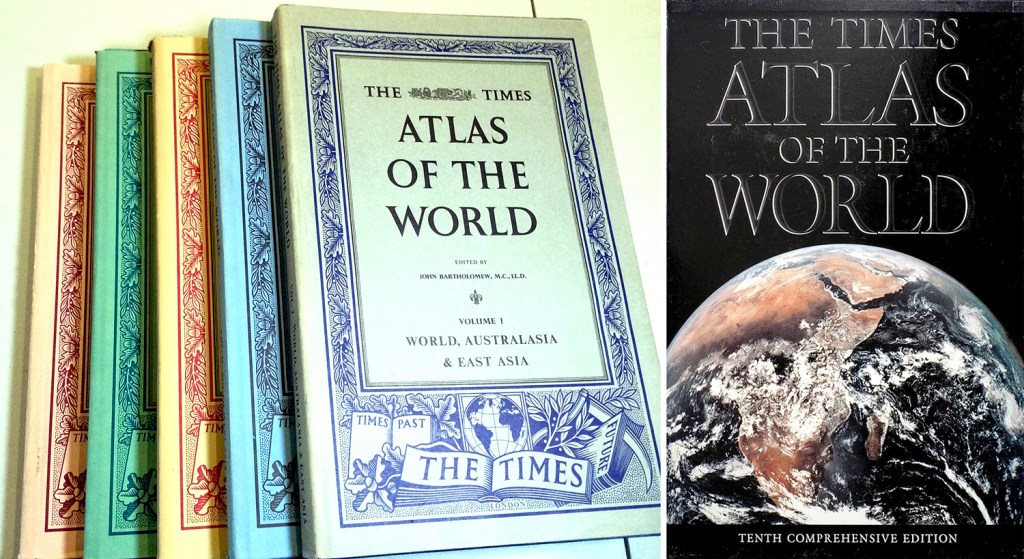

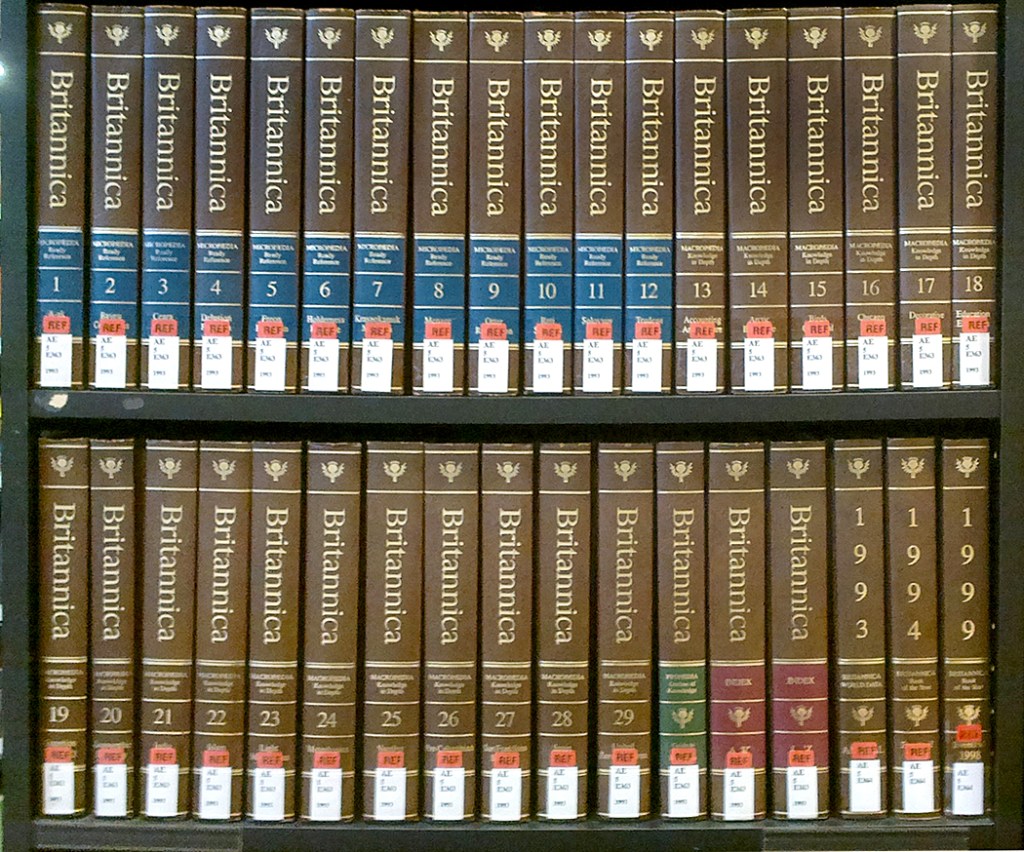

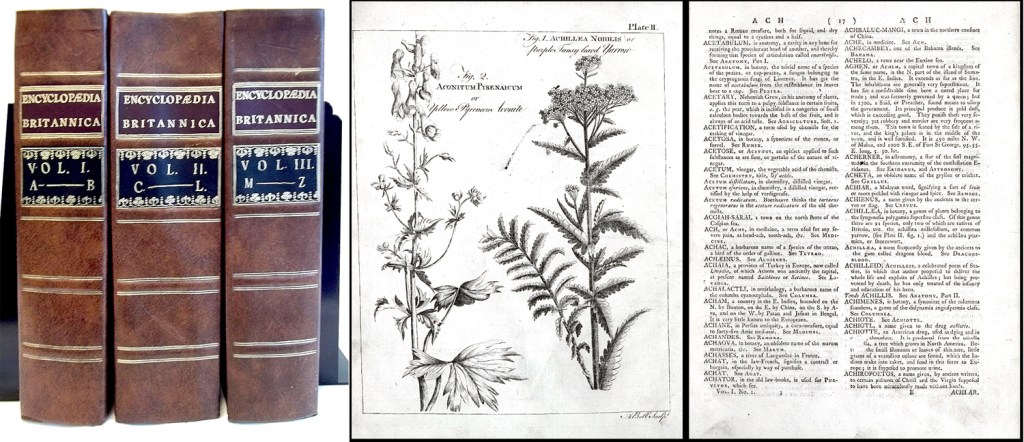

We were given a 1930s copy of the Compton’s Illustrated Encyclopedia and I read through it constantly. It might have been out of date, but it had lovely drawings of autogiros and streamlined trains, and I learned about everything from Angkor Wat to frog-egg fertilization and The Great War.

My parents, who had just high school educations and had lived through the Great Depression, were eager to educate their children to guarantee them good jobs in life. There was never any doubt they would send their three sons to college. And so, they encouraged this reading.

They thought they would help by buying books for me, including a copy of some young adult fiction. I sneered at it. Fiction? “I don’t want to read anything that isn’t true,” I said.

This first phase was the fact-gathering stage. I wanted to learn as much as possible and reading was the funnel that poured it all into my hungry brain. You have to fill the pepper grinder before you can grind pepper.

And so, it poured in and stuck: 44 B.C.; A.D. 1066; 1215; 1588; 1776; 1914 — and Aug. 6, 1945, a date that hangs over anyone my age like a threatening sky.

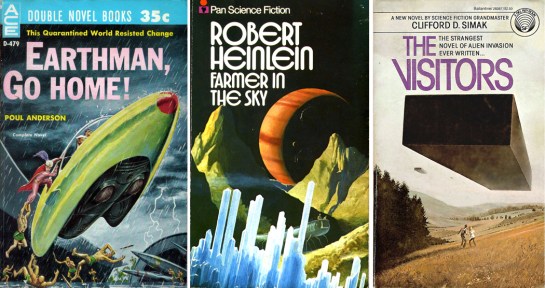

I stayed away from fiction until I was perhaps 12 or 13 years old, when I discovered science fiction. I gobbled it up like candy. All the mid-level sci-fi writers that were popular in the late ’50s and early ’60s: Poul Anderson; Lester del Rey; Robert Heinlein; Frederik Pohl; and, of course, Ray Bradbury. I must have burned out on it, because I cannot read any fantasy or sci-fi anymore. Lord of the Rings? God help me. No, never.

But there were the Fu Manchu books of Sax Rohmer, with their Yellow Peril and archly Victorian prose. All pure pulp, but they opened the world of fiction to me and transitioned me into the second phase of readership, which is perhaps the most embarrassing phase.

By high school, I wanted to prove I was a grown-up, and what is more, an intellectual grown-up. I subscribed to Evergreen Review and Paul Krassner’s Realist. I was hip, in my tight jeans, pointy shoes and hair slicked with Wildroot Cream Oil.

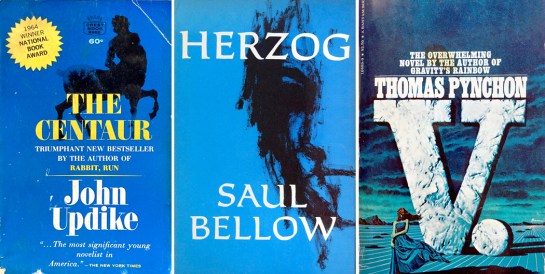

And I began reading important fiction of the time: Saul Bellow; John Updike; Norman Mailer; Thomas Pynchon. Of course, I didn’t understand any of them. I was a pimply-faced high-school kid. What did I know? But I looked oh, so sophisticated carrying around Herzog under my arm. It was only when I read it again a few years ago that I fully realized how funny the book is. I didn’t know it was a comedy when I first read it. There is nothing more earnest than a teenager.

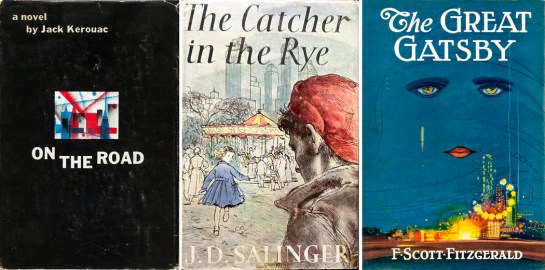

I did manage to pick up and understand On the Road and other Kerouac books, and J.D. Salinger spoke directly to my adolescent soul. On the Road held up on re-reading years later; not Catcher in the Rye, which is now close to unreadable. I couldn’t even finish a re-read.

The problem with adolescence is you have almost no life experience. It’s all just literature to you. You get caught up in “symbolism,” and what a book “means.” As if it were written in code to be deciphered. A 16-year-old is in no position to know what was going on in those books. It wasn’t much different from when we were assigned The Scarlet Letter or The Great Gatsby in eighth grade. I could understand the words, but not understand what was said between the lines.

A teenager thinks the world is simple. It is the tragedy of youth.

The following stage was college reading. There was, of course, a lot of it. But I loved it, especially the Classics courses I took, reading Greek and Roman authors, the English Romantic poets, and Chaucer. I took to Chaucer immediately. I still read him for the pleasure of his language. There was a course on Milton and another on William Blake. I ate the stuff up. I still do.

And I was then old enough that I actually understood much of what I was reading. I was beginning to have a life and feel the complexity of the world.

College only lasted four years and after that came marriage and divorce and unemployment and — just as bad — employment. I still read, but mostly I just tried to keep it all together, and not always successfully. (Unemployment gave me a lot of reading time and for months, I managed a book a day.)

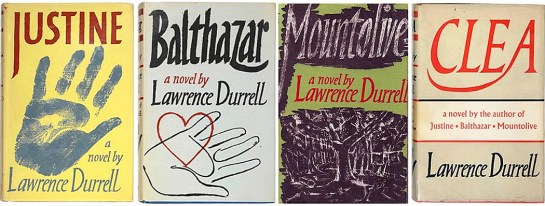

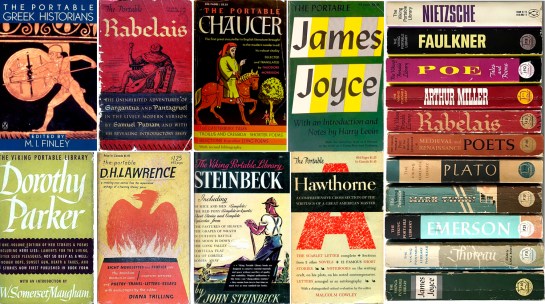

The fourth great phase of reading came after divorce and other traumatic events, when I had what might now be called a breakdown. It didn’t stop me reading, and actually it led to a fever of it. I read all of Henry Miller (or as much as was available, not counting his privately printed short works); all of Melville, or pretty near; piles of D.H. Lawrence; basket-loads of Twain, Hemingway and Faulkner; and Lawrence Durrell’s Alexandria Quartet, which seemed to sum up my situation. That marked a change in my relationship to books.

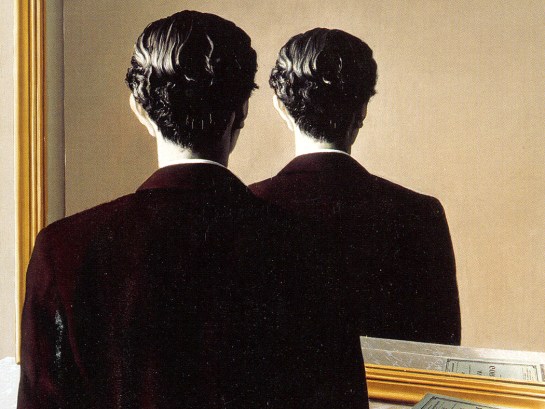

This was a turning point that not everyone seems to experience. Until this point, I measured my life against what I read. Was I like Nick Carraway or Sal Paradise? I wanted to be “important” like them and thought literature was there to show me the way.

After that point, instead of measuring my life against the books, I measured them against my life. Did they seem true to what I had experienced? When I was young, the books were real and my life a simulacrum; as I grew, it became clear my life was the real thing and the literature was only a reflection. Not “did I measure up,” but “did the books measure up?”

Reading, as a percentage of time spent, varies quite a bit over the course of a life. I find the more I am engaged with doing something, like work or socializing, the less time I spend reading. And the fifth phase began when I started teaching in Virginia. It wasn’t that I stopped reading, but that I did less of it, and that the books I did read tended more toward histories, biographies, memoirs and essays.

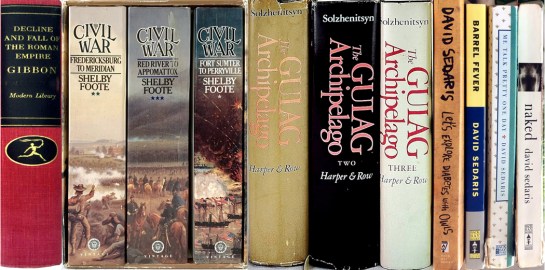

I tore through Shelby Foote’s three-volume history of the Civil War; a one-volume selection from Edward Gibbon’s Decline and Fall of the Roman Empire; tons of H.L. Mencken, including all his Prejudices; Solzhenitsyn’s Gulag Archipelago; and as antidote, all the David Sedaris I could find.

This phase continued through 25 years working for The Arizona Republic, in Phoenix, where I was, among other things, the art critic. I read a lot for work — including background on things I was writing about.

The relationship with books had changed, but so had my relationship with words altogether. When I was young, I was eager for knowledge and read by the boatload in search of ways to fill my insides. But somewhere along the line the well was full and the words spent less time piling in and more gushing out: I became a professional writer and all that back-pressure of words, information and experience burst out. I wrote like a hose spraying water. I couldn’t fill the buckets fast enough.

Even on vacations, driving around this country or others, I spent part of every day writing down notes that later turned into stories for the paper.

So, there were those two major shifts in life: first when I began measuring the books against my life; and second when instead of just adding more words to the pile, all the millions of words dammed up behind my cranium began pouring out.

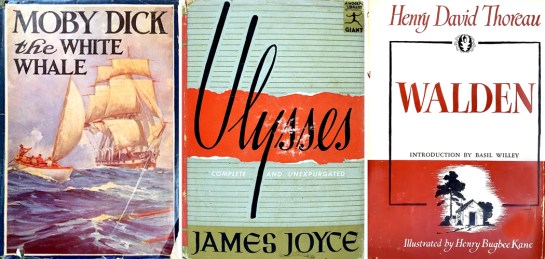

Through all of these phases, but increasingly over the years, I found myself re-reading as much as reading. My favorite books I tackled again and again. I cannot number the times I have read Moby Dick or Paradise Lost or James Joyce’s Ulysses — all dived into time after time for pure pleasure. There are others: Thoreau’s Walden; Hemingway’s Sun Also Rises; Gatsby, of course, now that I know what’s going on in it.

I try to re-read the Iliad once a year, usually with a different translation each time. I sometimes change pace and do the Odyssey. I tried Dante, and can gobble up Inferno any time, but admit I find Purgatorio and Paradiso quite a slog. I’ll stick with Hell, thank you.

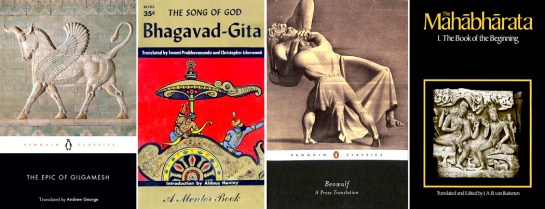

The books I’ve mentioned so far indicate a fairly parochial taste for Western culture, and I have to admit to that. But I’ve soaked up a fair amount of early and non-Western writings to try to keep some balance. I’ve read and re-read the Epic of Gilgamesh, the Mahabharata, Lao-Tze, Beowulf, and Basho’s Narrow Road to the Deep North, among others. I’ve read large swaths of the Vedas and Upanishads, and even a bit of various versions of the Book of the Dead. There is Sufi poetry and the Popol Vuh. I have tried to soften the boundaries of my cultural walls.

There is a sixth phase, where I am now: retired. Although, as I’ve said many times, a writer never really retires. He just stops getting paid for it. I now write this blog, for which I’ve written more than 800 entries, with another hundred for other online venues, all of which total a million and a half words, all since 2012 when I left the madding crowd behind.

I have slowed down some, but I still read. Now, I read a good deal of poetry. I find it satisfies my love not just of meaning but of the words themselves, the taste and mouth-feel of them. It is a connection with the world, and with the netting of language that holds that world up before my mind.

It is said in some cultures, after a life of striving and ambition, that there comes a time of quiet and reflection, a time to spend on weighing the life and figuring out where it falls in the larger picture of time and the universe.

I certainly feel that. But it hasn’t reduced my need to recharge that well from which I draw my words. My Amazon account proves that. I keep getting new books and find that I simply don’t have any more space on my bookshelves, and so, they pile up on top of other books and in dangerous stacks on the floor.

Every once in a while, there is a book-avalanche and under the chaos I rediscover some book I had forgotten and sit down and open it up again. I’ll deal with the mess later.